AI-UX: Chat-bot Intelligence Gradient Mismatches

Users assume a high level of intelligence from bots that are incapable of it, and the resulting mismatch leads to high abandonment rates. This gap is an opportunity to build products that are genuinely smarter.

Several chat-bots and “smart products” are examined below, with examples of both low- and high-intelligence responses. These examples show how each product approaches interactions with users, how well it explains its capabilities, and what users might expect it to do. The conclusions at the end suggest how designers and PMs could raise the bar on intelligence in their products, and how future smart products might behave.

Example: Alexa Chat Request

Observations:

• Open-ended questions are dangerous. If your bot asks them, users can type literally anything. This bot walks right into the problem by offering a lot while being capable of very little.

• Offering suggestions might work for new users doing basic exploration. However, for existing users who have already started a task, suggestions need to be highly context-aware. Bots represent their companies and products, and should be held to the same standards as human staff, or higher. No human support agent would offer suggestions as unrelated to the customer’s stated goal as those shown in the example above.

• Alexa doesn’t know the basics of its own infrastructure. It can explain what the ‘everywhere’ speaker group is in principle, but it can’t tell you why the group isn’t working or why it isn’t accessible from other music apps.

• For some questions, Alexa doesn’t even attempt a partial answer. It just says it “doesn’t know”, which is embarrassing, because the question is a valid one about Alexa itself. No human support agent would stop there; they would refer the question to an expert.

Example: Chase Support Chat Request

Observations:

• The bot presents itself as confident that it can answer a broad range of questions.

• In practice it can handle only a narrow set of tasks or topics, and it tries to push the user down those paths, even though that is not what the user wants to do. This is not a call center.

• Chat-bots (and the companies that operate them) should be careful not to waste customers’ time. Either the bot needs to state explicitly which topics it can discuss (Microsoft Copilot specifically tells you to ask only coding questions), or it needs a reasonable breadth of knowledge.

• This issue is not limited to chat-bots. I have often used a web site’s “support-chat” feature (which connects to a human operator), only to find out that the action I need can only be completed through phone support. This kind of “siloed support” wastes customers’ time and lowers satisfaction. The chat-bot should be able to handle everything itself, except possibly the most dangerous or delicate matters. For anything it can’t do, it should package up a detailed request and automatically submit it to the right person for prompt resolution.

• The absence of any way to give feedback on the Chase chat-bot’s responses suggests a lack of interest in pleasing customers. There is no ‘thumbs-up’ or ‘thumbs-down’ on its responses, so Chase appears to have no way to learn what needs improvement or how to fix it.

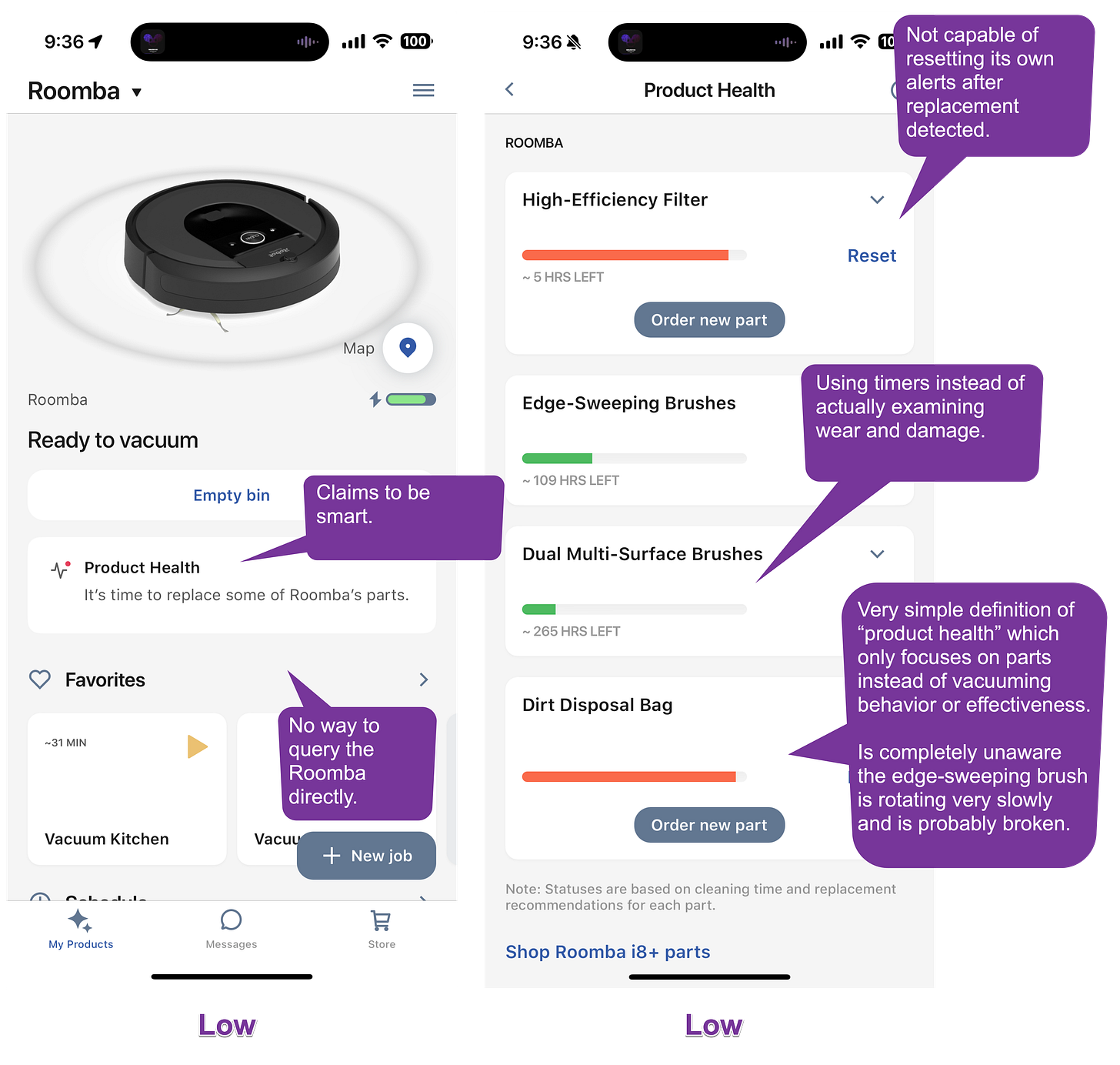
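The Chase observations describe a handoff in which the chat-bot packages everything it has learned into one request and sends it to the team that can act on it. This could be represented as a single structured payload. The sketch below is a minimal illustration that assumes a hypothetical `SupportRequest` record and a hypothetical `ROUTING_TABLE` mapping topics to internal team queues. None of these names come from Chase or from any real support system.

```python
import json
from dataclasses import dataclass, field

# Hypothetical mapping from request topic to the team that can act on it.
ROUTING_TABLE = {
    "wire_transfer": "payments-team",
    "fraud": "fraud-team",
    "account_access": "identity-team",
}
DEFAULT_QUEUE = "general-support"


@dataclass
class SupportRequest:
    """Hypothetical self-contained handoff, so the customer never repeats themselves."""
    user_goal: str
    topic: str
    attempts: list[str] = field(default_factory=list)        # what the bot already tried
    diagnostics: dict[str, str] = field(default_factory=dict)  # facts the bot gathered

    @property
    def queue(self) -> str:
        # Route to the specialist team, falling back to a general queue.
        return ROUTING_TABLE.get(self.topic, DEFAULT_QUEUE)

    def to_json(self) -> str:
        # Serialize the whole request so the receiving human sees full context.
        return json.dumps(
            {
                "queue": self.queue,
                "user_goal": self.user_goal,
                "topic": self.topic,
                "attempts": self.attempts,
                "diagnostics": self.diagnostics,
            },
            indent=2,
        )


# Illustrative values only.
request = SupportRequest(
    user_goal="Raise my daily transfer limit",
    topic="wire_transfer",
    attempts=["Explained current limit", "User verified identity in app"],
    diagnostics={"account_type": "checking"},
)
print(request.queue)  # payments-team
print(request.to_json())
```

The key design point is that the attempts and diagnostics travel with the request. The human who receives it can start where the bot stopped instead of starting over.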

Example: iRobot Roomba Vacuum Intelligence

Observations:

• The product claims to be smart and able to monitor its own “health”.

• The full extent of its “self-awareness” appears to be the estimated time remaining for installed parts. However, it may not know about parts the user replaced manually, components that failed early, or components the dog gnawed on. The feature seems more focused on generating ongoing revenue from replacement-part orders than on any real understanding of the robot’s health.

• Other health problems, such as “squeaking noises”, “brushes not rotating”, or “consistently getting stuck under the couch”, seem to be outside its awareness. In short, the robot doesn’t know when it is doing a poor job.

• There is no way to talk to this robot. A “talk to me” button in the app might help, but only if the robot were actually self-aware and competent. It’s not a stretch to imagine a “talk to me” button on the vacuum itself, so that when it got stuck you could immediately ask it why.

Conclusions

High Expectations: Users are rapidly getting used to high levels of intelligence in chat systems, and in products in general. Users don’t want to create support tickets, call people to explain problems, or read documentation. They want their problems resolved quickly, directly, and competently, and they have no patience for human or automated support that fails to do so.

Raise The Bar on Smartness: Many products that claim to be smart are actually at the low end of the intelligence spectrum. We probably need a set of criteria against which to judge smart products. Candidates include the ability to check the product’s current state, troubleshoot it, report its current configuration, change that configuration, explain its available functions, and acknowledge its limitations. A smart product should also be able to create a support request automatically, based on prior troubleshooting attempts, the user’s goals, and diagnostics.
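One way to start on such a set of criteria is a simple checklist. The sketch below scores a product by counting which of the listed capabilities it has, and it reports the gaps. The criterion names and the unweighted count are assumptions for illustration. A real rubric would likely weight some criteria, such as troubleshooting, more heavily than others.

```python
# Hypothetical rubric derived from the criteria listed above (names are illustrative).
CRITERIA = (
    "check_current_state",
    "troubleshoot",
    "know_configuration",
    "change_configuration",
    "understand_functions",
    "know_limitations",
    "create_support_request",
)


def smartness_score(capabilities: set[str]) -> tuple[int, list[str]]:
    """Return how many criteria a product meets and which ones it is missing."""
    missing = [c for c in CRITERIA if c not in capabilities]
    return len(CRITERIA) - len(missing), missing


# A hypothetical product that can only report its current state.
hypothetical_product = {"check_current_state"}
score, gaps = smartness_score(hypothetical_product)
print(f"{score}/{len(CRITERIA)} criteria met; missing: {', '.join(gaps)}")
```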

Don’t Be A Know-Nothing: “Sorry, I don’t know that” is a poor user experience. It will become increasingly unacceptable in domains where the product should be the authority (e.g. a Roomba should know its own parts and status).

Give Your Product A Voice: Many product managers and designers don’t yet realize that customers should be able to chat with their products. Want to ask your phone why it’s making noises? Want to ask your Roomba why it doesn’t avoid the couch it gets stuck under? Want Alexa to fix a speaker configuration for you? Want to ask Chase about the pros and cons of different investment options? All of these things should be possible, and the app or robot needs an easy way for users to talk to it.

The chat-bot doesn’t necessarily need a name or any personification, but there should be a “talk to me” button in every app and on every robot’s exterior. In Microsoft’s case, it seems logical to have a “Microsoft Corporate” persona (similar to its existing PR department) and an “Excel” persona (focused on that product), though perhaps one AI could represent both. Using real human names for these personas is likely to confuse matters; it’s better to know that you’re talking to an AI, and what it is competent to discuss.

Training Data Will Matter: Users will expect bots to understand who they are, their personal configuration, their account details, and the products they own. This means the LLM will need to be trained on this data, or be able to query it quickly from an external source.
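The second option, querying user data at request time instead of training on it, can be sketched as a lookup that places the user’s own context ahead of the question before it reaches the model. The sketch assumes a hypothetical in-memory `USER_STORE` and a plain-text prompt layout. A real system would query an account or device service instead.

```python
from dataclasses import dataclass


@dataclass
class UserContext:
    name: str
    owned_products: list[str]
    settings: dict[str, str]


# Hypothetical in-memory store standing in for an account or device service.
USER_STORE = {
    "u123": UserContext(
        name="Sam",
        owned_products=["smart speaker", "robot vacuum"],
        settings={"speaker_group": "everywhere", "vacuum_schedule": "weekdays 9am"},
    ),
}


def build_prompt(user_id: str, question: str) -> str:
    """Fetch the user's own data at query time and place it ahead of the question."""
    ctx = USER_STORE.get(user_id)
    if ctx is None:
        # No known context: pass the question through unchanged.
        return f"Question: {question}"
    settings = "; ".join(f"{k}={v}" for k, v in ctx.settings.items())
    return (
        f"User: {ctx.name}\n"
        f"Owned products: {', '.join(ctx.owned_products)}\n"
        f"Current settings: {settings}\n"
        f"Question: {question}"
    )


print(build_prompt("u123", "Why can't my music app see the everywhere group?"))
```

Because the data is fetched per request, the bot always sees the user’s current configuration, not a snapshot from whenever a model was last trained.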

Give Your Bot Agency: Users won’t just want to ask what is wrong with their current configuration; they want it fixed. The AI should probably ask for permission or confirmation before some actions, but it should be able to carry them out (and undo them) on the user’s behalf.
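The confirm-then-act pattern, with an undo path, can be sketched as a small executor that keeps a history of applied actions. This is a minimal sketch under stated assumptions. `Action`, `AgentExecutor`, and the speaker-group dictionary are hypothetical stand-ins, not part of any Alexa API. The `confirm` callback stands in for whatever yes/no prompt the chat interface would show.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    description: str
    apply: Callable[[], None]
    undo: Callable[[], None]
    needs_confirmation: bool = True


class AgentExecutor:
    """Runs actions for the user, asks first when required, and keeps an undo stack."""

    def __init__(self, confirm: Callable[[str], bool]):
        self.confirm = confirm
        self.history: list[Action] = []

    def run(self, action: Action) -> bool:
        if action.needs_confirmation and not self.confirm(action.description):
            return False  # user declined; nothing is changed
        action.apply()
        self.history.append(action)
        return True

    def undo_last(self) -> bool:
        if not self.history:
            return False  # nothing to undo
        self.history.pop().undo()
        return True


# Hypothetical device configuration, echoing the Alexa speaker-group example.
speaker_groups = {"everywhere": ["kitchen"]}

add_living_room = Action(
    description="Add the 'living room' speaker to the 'everywhere' group?",
    apply=lambda: speaker_groups["everywhere"].append("living room"),
    undo=lambda: speaker_groups["everywhere"].remove("living room"),
)

executor = AgentExecutor(confirm=lambda prompt: True)  # stand-in for a real yes/no prompt
executor.run(add_living_room)
print(speaker_groups)  # {'everywhere': ['kitchen', 'living room']}
executor.undo_last()
print(speaker_groups)  # {'everywhere': ['kitchen']}
```

Keeping `apply` and `undo` together on each action means the bot can offer to reverse anything it has done, not just the most recent change.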

An End To Documentation: Users don’t want to read your docs. Docs are long, poorly organized, not focused on the user’s exact scenario, and demand too much of the user’s time. They are also not intelligent. “Documentation” will become an internal database that the AI is trained on or can access, and the interface to it will be an AI chat. Don’t tell the user to read a section of the docs or follow a generic set of instructions that may not apply to their situation. Instead, give the user just the instructions they need to reach their goal.

Scale Down Expectations If Needed: If it really isn’t possible to use an LLM that can handle a broad range of questions, this needs to be communicated explicitly to the user. Having the user choose a mode before entering a question might help. Offering suggestions is not enough; the bot needs a clearly defined scope and a list of supported commands.
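An explicit scope can be as simple as a fixed command list. The bot states the list up front and repeats it whenever a request falls outside it. The sketch below assumes a hypothetical banking bot with three illustrative commands and uses plain prefix matching. A production bot would more likely use an intent classifier, but the principle of restating scope instead of guessing is the same.

```python
# Hypothetical command list for a narrowly scoped banking bot (illustrative only).
SUPPORTED_COMMANDS = {
    "balance": "Show the balance of one of your accounts",
    "recent": "List your most recent transactions",
    "card lock": "Lock a lost or stolen debit card",
}


def scope_message() -> str:
    """State the bot's scope explicitly instead of leaving users to guess."""
    lines = ["I can only help with the following:"]
    lines += [f"  {cmd}: {desc}" for cmd, desc in SUPPORTED_COMMANDS.items()]
    return "\n".join(lines)


def handle(user_input: str) -> str:
    """Run a supported command, or decline plainly and restate the scope."""
    text = user_input.strip().lower()
    for cmd in SUPPORTED_COMMANDS:
        # Simple prefix matching; a real bot would likely classify intent instead.
        if text.startswith(cmd):
            return f"OK, running '{cmd}'."
    return "That is outside what I can do.\n" + scope_message()


print(scope_message())
print(handle("What are the pros and cons of index funds?"))
```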